An introduction to {shinyValidator}

2022-11-14

David Granjon, Novartis

Welcome

Hi, I am David Granjon

Senior Software developer at Novartis.

We’re in for 2 hours of fun!

- Grab a ☕

- Make yourself comfortable 🛋 or 🧘

- Ask questions ❓

Program

- Introduction 10 min

- Setup {shinyValidator} 20 min

- Discover {shinyValidator} 30 min

- Customize {shinyValidator} 40 min

- Add CI/CD (if time allows)

- Q&A

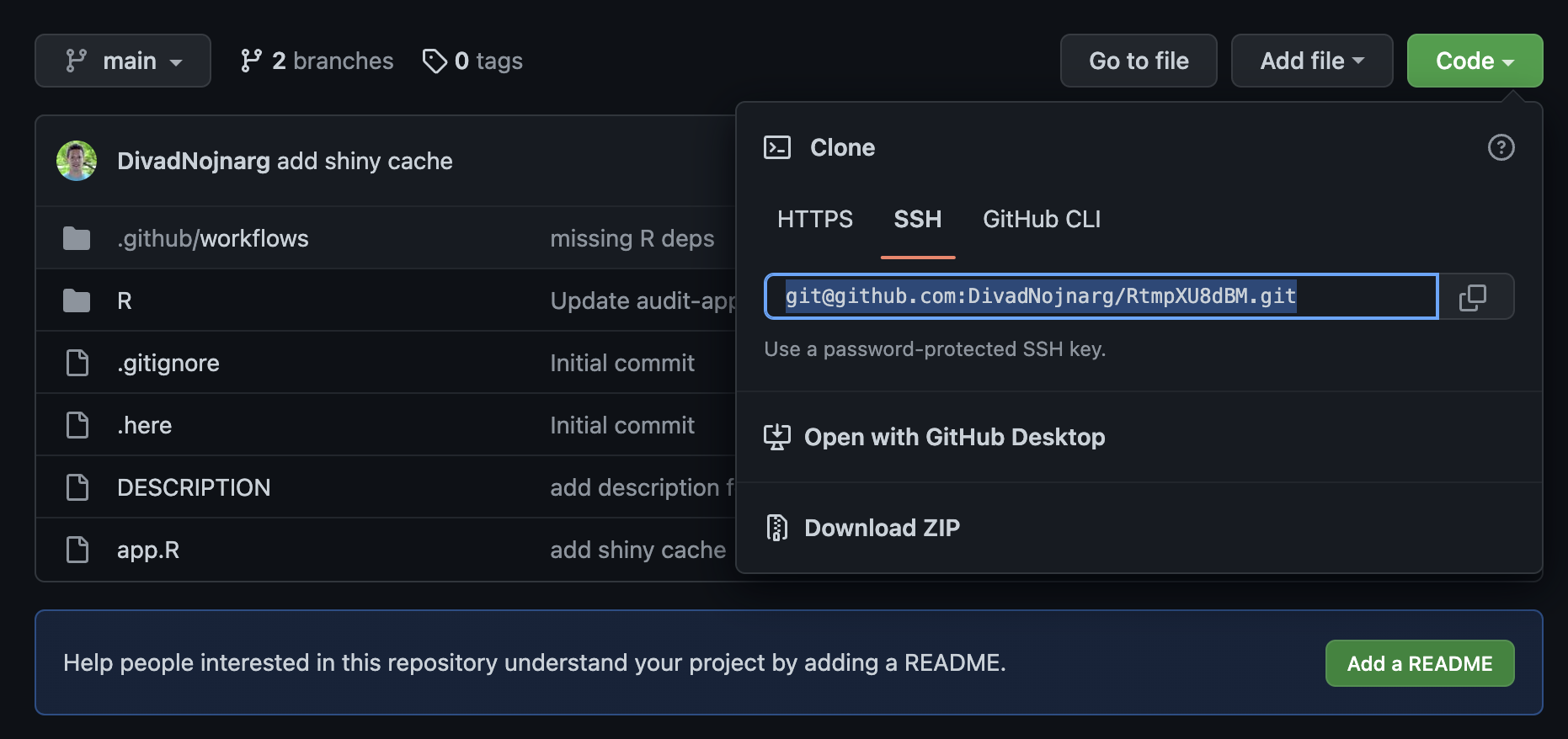

Workshop Material

Clone this repository with the RStudio IDE or via the command line.

Then run renv::restore() to install the dependencies.

Pre-requisites

If you want to run {shinyValidator} locally (not on CI/CD), you must have:

- shinycannon installed for the load-test part. See here.

- A Chrome-based browser installed, such as Chromium.

- git installed and a GitHub account.

- A recent R version, if possible R >= 4.1.0.

Introduction

Clothes don’t make the man

Your app may be as beautiful and as cool as you want, but it is useless if it does not start or run.

From prototype to production

How do we transition❓

Reliable: is the app doing what it is intended to do?

Stable: how often does it crash?

Available: is the app fast enough to handle multiple concurrent users?

In practice, few apps meet all these requirements 😈.

Available tools

- Easier checking, linting, documentation and testing.

- Just … easier. 😀

- Fix package versions.

- Increased reproducibility.

- Unit tests: test business logic.

- Server testing: test how Shiny modules or pieces work together (with reactivity).

- UI testing: test UI components, snapshots, headless-testing (shinytest2).

Are there bottlenecks?

- Load testing: How does the app behave with 10 simultaneous users? shinyloadtest.

- Profiling: What part of my app is slow? profvis.

- Reactivity: Are there any reactivity issues? reactlog.

Automate: CI/CD

- Continuous integration: automatically check new features. 🏥

- Continuous deployment: automatically deploy content. ✉️

- Running on a remote environment ☁️:

- Automated.

- More reproducible (more OS/R flavors available).

- Time saver.

- Less duplication.

Not easy 😢

- Select DevOps platform (GitLab, GitHub, …).

- Add version control (git knowledge).

- Build custom GitLab runner (optional).

- Write CI/CD instructions (better support for GitHub).

Can’t we make things easier❓

Stop … I am lost …

- There are just so many tools! How to use them properly?

- Is there a way to automate all of this? I just don’t have time … 😞

Welcome {shinyValidator}

- Integrates all previously mentioned tools.

- Produces a single HTML report output.

- Flexible.

Setup {shinyValidator}

{golem}

We create an empty golem project:
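As a minimal sketch of this step, assuming the {golem} package is installed: `golem::create_golem()` scaffolds a package-structured Shiny app in a new folder. The wrapper name `create_empty_golem()` and its arguments are hypothetical and only there for illustration; `pkg_name` stands for the `<PKG>` placeholder used in this deck.

```r
# Hypothetical wrapper: create a golem skeleton in a fresh folder.
create_empty_golem <- function(pkg_name, parent_dir = getwd()) {
  if (!requireNamespace("golem", quietly = TRUE)) {
    stop("Install {golem} first, for example with renv::install('golem').")
  }
  # create_golem() expects the path of the new project folder;
  # open = FALSE avoids switching RStudio projects in the middle of a session.
  golem::create_golem(path = file.path(parent_dir, pkg_name), open = FALSE)
}

# Usage (not run here): create_empty_golem("mypkg")
```

Calling `golem::create_golem()` directly from the console works just as well; the wrapper only adds a friendlier error when {golem} is missing.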

{golem}

We add some useful files, basic test and link to git:

Put some real server code
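One possible version of that server code is sketched below. The input name `obs` and the output name `distPlot` are taken from the tests used later in this workshop; the function bodies, the slider bounds and the simplified UI (without the golem resource helpers) are assumptions, not necessarily the code shown live.

```r
# Sketch of R/app_ui.R and R/app_server.R; bodies are assumptions.
app_ui <- function(request) {
  shiny::fluidPage(
    # Slider feeding input$obs; a minimum of 0 leaves room for a failing case
    shiny::sliderInput(
      "obs", "Number of observations:",
      min = 0, max = 1000, value = 500
    ),
    shiny::plotOutput("distPlot")
  )
}

app_server <- function(input, output, session) {
  # Redraw the histogram each time the slider changes
  output$distPlot <- shiny::renderPlot({
    graphics::hist(stats::rnorm(input$obs))
  })
}
```

To try it outside the package, `shiny::shinyApp(app_ui, app_server)` should be enough.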

Create empty GitHub repo

Browse to GitHub and create an empty repository called <PKG> matching the previously created package.

Add remote repo to local

Go to terminal tab under RStudio:

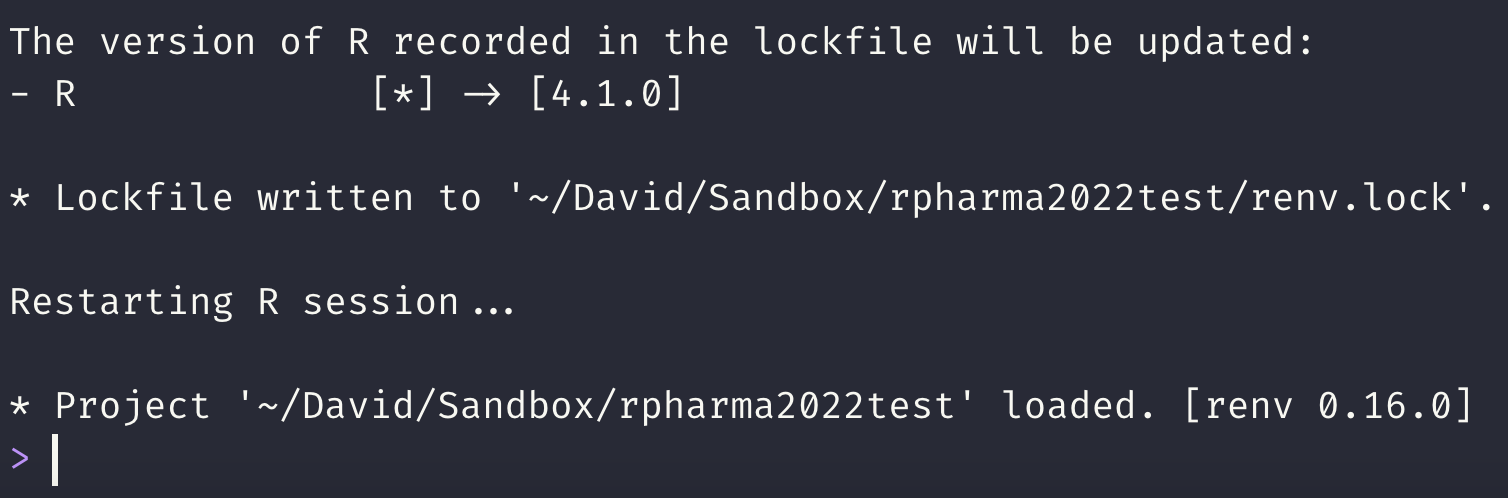

{renv}

Initialize renv for R package dependencies:

{renv}

system("echo 'RENV_PATHS_LIBRARY_ROOT = ~/.renv/library' >> .Renviron")

# SCAN the project and look for dependencies

renv::init()

# install missing packages

renv::install("<PACKAGE>")

# Capture new dependencies after package installation

renv::snapshot()

Install {shinyValidator}

Review the file structure

{shinyValidator}: step by step

Overall concept

%%{init: {'theme':'dark'}}%%

flowchart TD

subgraph CICD

direction TB

subgraph DMC

direction LR

E[Lint] --> F[Quality]

F --> G[Performance]

end

subgraph POC

direction LR

H[Lint] --> I[Quality]

end

end

A(Shiny Project) --> B(DMC App)

A --> C(Proof of concept App POC)

B --> |strict| D[Expectations]

C --> |low| D

D --> CICD

CICD --> |create| J(Global HTML report)

J --> |deploy| K(Deployment server)

click A callback "Tooltip for a callback"

click B callback "DMC: data monitoring committee"

click D callback "Apps have different expectations"

click E callback "Lint code: check code formatting, style, ..."

click F callback "Run R CMD check + headless crash test (shinytest2)"

click G callback "Optional tests: profiling, load test, ..."

click J callback "HTML reports with multiple tabs"

click K callback "RStudio Connect, GitLab/GitHub pages, ..."

Audit app

audit_app() is the main function:

- headless actions: pass shinytest2 instructions.

- timeout: time to wait for the app to start.

- …: parameters to pass to run_app(), such as database logins, …

- scope: predefined set of parameters (see examples).
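The parameters above could be combined as in the sketch below, assuming {shinyValidator} is installed and the working directory is the root of the app package. `scope = "POC"` is the value used later in this workshop; the helper name `run_audit()`, the default `timeout = 5` and its unit are assumptions to check against `?shinyValidator::audit_app`.

```r
# Hypothetical helper: run the audit with a predefined scope.
run_audit <- function(scope = "POC", timeout = 5) {
  if (!requireNamespace("shinyValidator", quietly = TRUE)) {
    stop("{shinyValidator} is not installed in this library.")
  }
  # scope picks a predefined set of checks;
  # timeout gives the app some time to start before the checks run
  shinyValidator::audit_app(scope = scope, timeout = timeout)
}

# Usage (not run here): run_audit("POC")
```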

Audit app: example

%%{init: {'theme':'dark'}}%%

graph TD

A(Check) --> B(Crashtest)

B --> C(Loadtest)

C --> D(Coverage)

D --> E(Reactivity)

click A callback "devtools::check"

click B callback "{shinytest2}"

click C callback "{shinyloadtest}"

click D callback "{covr}"

click E callback "{reactlog}"

Audit app: using scope parameter

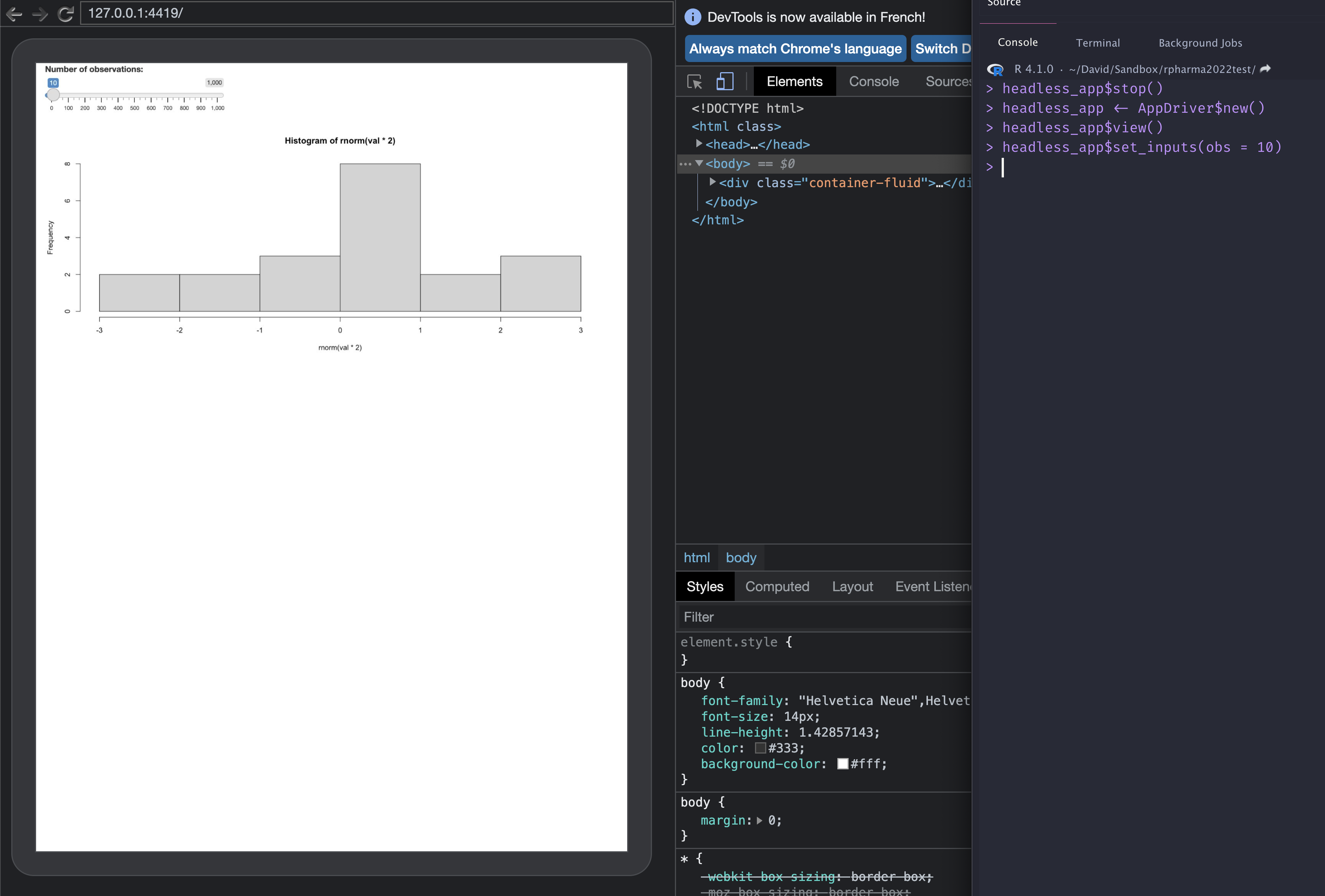

Audit app: headless manipulation (1/2)

This code is run during crash test, profiling and reactivity check.

Headless manipulation: your turn 🎮 (2/2)

Run the following code step by step:

# Start the app
library(shinytest2)
headless_app <- AppDriver$new("./app.R")
# View the app for debugging (does not work from Workbench!)
headless_app$view()
headless_app$set_inputs(obs = 1)
headless_app$get_value(input = "obs")
# You can also run JS code!
headless_app$run_js("$('#obs').data('shiny-input-binding').setValue($('#obs'), 100);")
# Now you can call any function
# Close the connection before leaving
headless_app$stop()

About monkey testing (1/2)

run_crash_test() runs a gremlins.js test if no headless actions are passed:

About monkey testing (2/2)

Your turn 👩‍🔬

- Run ./app.R in an external browser.

- Open the developer tools (ctrl + shift (Maj) + I for Windows, option + command + I on Mac).

- Browse to https://marmelab.com/gremlins.js/ and copy the Bookmarklet Code on the right.

- Copy this code into the Shiny app HTML inspector JS console.

- Enjoy that moment.

Report example

Your turn 🎮

- From the R console, call shinyValidator::audit_app(scope = "POC").

- Look at the log messages.

- When done, open public/index.html (external browser).

- Explore the report.

- Modify app code and rerun …

Pro tip

Clean up between each run!
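A small helper can make that cleanup a one-liner. This is a sketch under the assumption that the report is written to a `public` folder, as the `public/index.html` path above suggests; the function name `clean_report()` is hypothetical.

```r
# Hypothetical helper: remove the previous report folder before a new audit.
clean_report <- function(dir = "public") {
  if (dir.exists(dir)) {
    unlink(dir, recursive = TRUE)
  }
  # TRUE when the folder is gone (or never existed)
  invisible(!dir.exists(dir))
}

# Usage (not run here): clean_report(); shinyValidator::audit_app(scope = "POC")
```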

Improve

Your turn 🎮

Disable other checks

Modify shinyValidator::audit_app parameters:
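One way to do this is sketched below. The switch names `load_testing`, `profile_code` and `check_reactivity` are assumptions and should be confirmed in `?shinyValidator::audit_app`; the helper `run_light_audit()` is hypothetical. Because `audit_app()` forwards unknown arguments to `run_app()` through `…`, the sketch first checks the names against the function's formals instead of letting a misspelled switch slip through.

```r
# Assumed switch names; confirm them in ?shinyValidator::audit_app.
light_audit_args <- list(
  load_testing = FALSE,     # skip the load test
  profile_code = FALSE,     # skip code profiling
  check_reactivity = FALSE  # skip the reactivity check
)

# Hypothetical helper: call audit_app() with the switches above.
run_light_audit <- function(args = light_audit_args) {
  if (!requireNamespace("shinyValidator", quietly = TRUE)) {
    stop("{shinyValidator} is not installed in this library.")
  }
  # Fail early on names that are not real audit_app() arguments
  known <- names(formals(shinyValidator::audit_app))
  unknown <- setdiff(names(args), known)
  if (length(unknown) > 0) {
    stop("Unknown audit_app() arguments: ", paste(unknown, collapse = ", "))
  }
  do.call(shinyValidator::audit_app, args)
}
```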

Add server testing

usethis::use_test("app-server-test")

# Inside app-server-test

testServer(app_server, {

session$setInputs(obs = 0)

# There should be an error

expect_error(output$distPlot)

session$setInputs(obs = 100)

str(output$distPlot)

})

# Test it

devtools::test()

Run shinyValidator::audit_app and have a look at the coverage tab.

Customize Crash test

Leverage the power of shinytest2, app being the Shiny app to audit.

Run the above code and have a look at the screenshots.

Output checks (1/3)

Create this function in helpers.R:
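A possible version of that helper is sketched here. Only the name `make_hist()` and the call `make_hist(500)` come from this workshop; the body, the argument name `n` and the plot labels are assumptions.

```r
# Hypothetical body for the make_hist() helper referenced in the tests.
make_hist <- function(n) {
  # Draw n values from a standard normal distribution and plot them
  # with base graphics; hist() invisibly returns a "histogram" object.
  graphics::hist(
    stats::rnorm(n),
    main = "Histogram of random values",
    xlab = "Value"
  )
}

# Usage (not run here): set.seed(42); make_hist(500)
```

Keeping the plotting code in a plain function, rather than inline in the server, is what makes it possible to test the plot without starting the app.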

Add it to app_server.R:

Enable output check in shinyValidator::audit_app:

Output checks (2/3)

Create a new test:

usethis::use_test("test-base-plot")

renv::install("vdiffr")

# Inside test-base-plot

test_that("Base plot OK", {

set.seed(42) # to avoid the test from failing due to randomness :)

vdiffr::expect_doppelganger("Base graphics histogram", make_hist(500))

})

# Test it

devtools::test()

Output checks (3/3)

Performance: Code profiling (1/3)

Performance: Code profiling (2/3)

Modify the custom headless script by adding a timeout:

Run shinyValidator::audit_app and have a look at the profiling tab.

Performance: Code profiling (3/3)

Performance: Load testing (1/2)

Performance: Load testing (2/2)

Let’s add CI/CD

Pipeline output

{shinyValidator} CI/CD file

In case you need to control branches triggering {shinyValidator}:

If you have to change the R version, os, …:

- name: Lint code
  shell: Rscript {0}
  run: shinyValidator::lint_code()

- name: Audit app 🏥
  shell: Rscript {0}
  run: shinyValidator::audit_app()

- name: Deploy to GitHub pages 🚀
  if: github.event_name != 'pull_request'
  uses: JamesIves/github-pages-deploy-action@4.1.4
  with:
    clean: false
    branch: gh-pages
    folder: public

Example: disable other checks

Modify GitHub actions yaml file:

Run our first pipeline

- Make sure GitHub Pages is enabled.

- Commit and push the code to GitHub.

- You can follow the GitHub actions logs.

- When done, open the report and discuss results.

- Time to add some real things!

What’s next?

Test with your own app

Got a top-notch app? Try to set up {shinyValidator} and run it.

Be patient

CI/CD and testing are not easy!

Recommended reading

Mastering Shiny UI

Thank you!

Follow me on Fosstodon. @davidgranjon@fosstodon.org

Disclaimer

- This presentation is based on publicly available information (including data relating to non-Novartis products or approaches).

- The views presented are the views of the presenter, not necessarily those of Novartis.

- These slides are intended for educational purposes only and for the personal use of the audience. These slides are not intended for wider distribution outside the intended purpose without presenter approval.

- The content of this slide deck is accurate to the best of the presenter’s knowledge at the time of production.